Benchmarking the Social Intranet.

Of late, I’ve been crunching some numbers. My aim is simple: to get a better sense of what adoption figures mean and how we might begin to benchmark the social intranet successfully. The inspiration for the exercise came from a call with Gia Lyons and Claire Flanagan of Jive Software, and I’d like to thank them for providing the means to apply some ratios to a social business platform rollout for internal collaboration, i.e. the social intranet.

For my example I’m going to use some of the key adoption metrics generated from Jive’s Community Manager Reports (CMR) tool. These are as follows:

| Name | Description |
| --- | --- |
| Active | Users who have viewed at least one item in the previous 30 days |
| Participating | Users who have commented, liked, rated/voted, edited, or created content in the previous 30 days |
| Contributing | Users who have created new content (blogs, wikis, threads, video etc.) in the previous 30 days |
| Logged in users | Users who have logged in at least once since site creation |
| Total users | All system users, excluding disabled |

Whilst this is how Jive provides its core reporting, the same metrics could equally well apply to IBM Connections, SharePoint, Blue Kiwi or any other social business platform.

The Social Business Adventure of Five Fictional Companies

To bring this exercise to life, I’ve created some adoption data tables for 5 very different companies. These are fictional entities constructed for this exercise, but all are in sectors that I’ve engaged with in social business projects over the last 15-20 years or so, namely technology, education, finance, media and energy. Given this, I can apply some insight into these companies, but I must state that some of the adoption rates are aspirational! The companies are as follows:

American Gasoline (AG): global oil and energy company

Royal Bank of Ireland (RBI): an EU bank

Silicon Valley Circuits (SVC): a leading tech company

Media Moguls (MM): a successful media company

University of Chiswick (UoC): a prestigious UK university

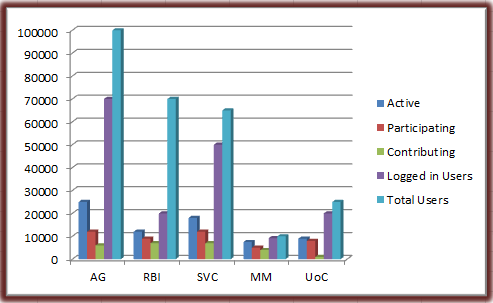

The adoption figures for these companies are as follows:

| Company | Active | Participating | Contributing | Logged in Users | Total Users |
| --- | --- | --- | --- | --- | --- |
| AG | 25000 | 12000 | 6000 | 70000 | 100000 |
| RBI | 12000 | 9000 | 7000 | 20000 | 70000 |
| SVC | 18000 | 12000 | 7000 | 50000 | 65000 |
| MM | 7500 | 5000 | 4000 | 9200 | 10000 |
| UoC | 9000 | 8000 | 1000 | 20000 | 25000 |

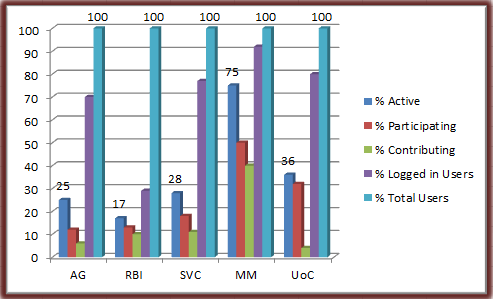

As a bar chart, the figures look like this:

What are we to make of these figures? How well is each company doing, and how do they benchmark against one another?

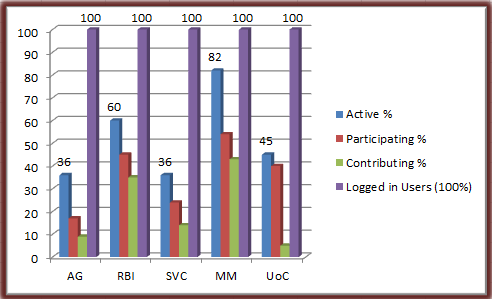

Representing Social Business Adoption as a Percentage

The first thing we need to do is turn these figures into percentages. Taking the total users in the system as 100%, we get the following table:

| Company | % Active | % Participating | % Contributing | % Logged in Users | % Total Users |
| --- | --- | --- | --- | --- | --- |
| AG | 25 | 12 | 6 | 70 | 100 |
| RBI | 17 | 13 | 10 | 29 | 100 |
| SVC | 28 | 18 | 11 | 77 | 100 |
| MM | 75 | 50 | 40 | 92 | 100 |
| UoC | 36 | 32 | 4 | 80 | 100 |
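For anyone who would rather check the sums in code than in Excel, here is a minimal Python sketch of the conversion. The figures are copied from the adoption table; the names `ADOPTION` and `percent_of_total` are my own, purely for illustration, and rounding to whole numbers is an assumption that happens to match the percentages shown.

```python
# Adoption figures copied from the adoption table, in the order:
# (active, participating, contributing, logged_in, total)
ADOPTION = {
    "AG":  (25000, 12000, 6000, 70000, 100000),
    "RBI": (12000, 9000, 7000, 20000, 70000),
    "SVC": (18000, 12000, 7000, 50000, 65000),
    "MM":  (7500, 5000, 4000, 9200, 10000),
    "UoC": (9000, 8000, 1000, 20000, 25000),
}


def percent_of_total(row):
    """Express each figure in a row as a whole-number percentage of total users."""
    total = row[-1]  # total users is the last figure in each row
    return tuple(round(100 * value / total) for value in row)


for company, row in ADOPTION.items():
    print(company, percent_of_total(row))
```

Run as is, this prints one row of percentages per company, for example `RBI (17, 13, 10, 29, 100)`.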

Benchmark 1: How many employees are using the site?

This is based on conversations at the Social Business Council and research conducted there earlier in the year by Susan Scrupski. The basic consensus is that we should aim to get half of our employees at least viewing material on the site in a 30-day period (Jive’s Active Users).

As we can see here, this has been achieved in only one of my examples, Media Moguls. This is not uncommon: a comment I often hear from other people working on driving social business adoption is that getting to 50% is very difficult. In some ways it’s a crude but pretty indicative mark: is the site at least being viewed by most of the employees?

Benchmark 1: a target of 50% of employees viewing content in a 30-day period.

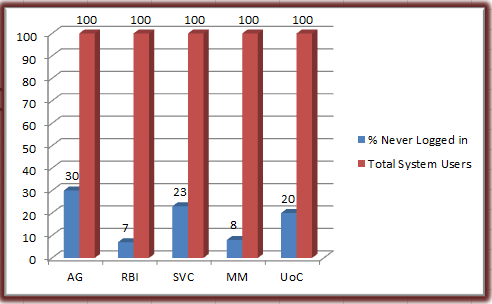

Benchmark 2: How widely is the social business platform rolled out?

This benchmark is a simple and effective measure of how widely the social business platform has been rolled out. You might well get a situation where there’s a very lively and value-creating community but where this is an enclave within the company. Here we’re looking at the total possible universe of users and how many have ever logged in. The total universe is all those who can have access (most likely the total in Active Directory, as discussed below). Jive’s figure here is that 90% should have logged in. When we look at our communities this is how they pan out:

On this benchmark only Media Moguls is doing well, with 92% of its users having logged in. At American Gasoline, nearly a third of all people in the company have never logged in, and at the Royal Bank of Ireland the figure is over 70%. Clearly there’s some wider internal communications work needed here to expand the community out and to get people using the site.

Benchmark 2: 90% of employees should have logged in to the social business platform

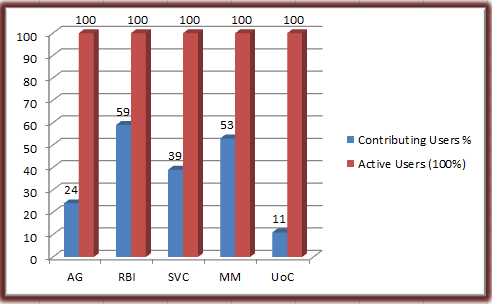
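Since the first two benchmarks are both measured against total users, they can be checked together. The sketch below is only an illustration under that reading: the figures come from the adoption table, while `FIGURES`, `meets_targets` and the two threshold constants are names I’ve made up for the purpose.

```python
# (active, logged_in, total) for each company, copied from the adoption table
FIGURES = {
    "AG":  (25000, 70000, 100000),
    "RBI": (12000, 20000, 70000),
    "SVC": (18000, 50000, 65000),
    "MM":  (7500, 9200, 10000),
    "UoC": (9000, 20000, 25000),
}

ACTIVE_TARGET = 0.50     # Benchmark 1: share of total users active in 30 days
LOGGED_IN_TARGET = 0.90  # Benchmark 2: share of total users who have ever logged in


def meets_targets(active, logged_in, total):
    """Return (benchmark_1_met, benchmark_2_met) for one company."""
    return (active / total >= ACTIVE_TARGET,
            logged_in / total >= LOGGED_IN_TARGET)


results = {name: meets_targets(*row) for name, row in FIGURES.items()}

for name, (b1, b2) in results.items():
    never = 1 - FIGURES[name][1] / FIGURES[name][2]  # share who never logged in
    print(f"{name}: 50% active met={b1}, 90% logged in met={b2}, "
          f"never logged in={never:.0%}")
```

With these figures only Media Moguls clears both thresholds; every other company misses both.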

Benchmark 3: How active are communities?

The next benchmark I’m going to apply measures how active the communities are. So far we have useful benchmarks on the very big picture, but in order to get a clearer comparative overview of the companies we need to apply some further measures. These will then allow us to start to benchmark the actual performance of the sites themselves against each other.

What we’re trying to find out here is how active the people currently using the community actually are. To calculate this, take the total who have ever logged in as 100% and then calculate Active, Participating and Contributing Users as percentages of that. Jive thinks 50% or more should be active here. It is important to separate Logged in users from Total users because, for many companies, the total number of system users is as likely as not pulled in from Active Directory by an LDAP sync. As we saw above, those system users might not even know the site exists. What we need to know, therefore, is how the activity of those who have logged in, and thus the site, fares.

So how do our communities measure up?

The result is patchy. If 50% is the benchmark figure for Active Users against those who have ever logged in, then only Media Moguls and the Royal Bank of Ireland have achieved it. At the rest, the majority of people who have ever logged in have not returned in the last 30 days. And remember, Active Users in Jive’s terminology are just those who have viewed something. If we’re looking at those generating new content or commenting on existing content then the figure is even lower.

Benchmark 3: the number of Active Users should be 50% or higher when measured against those who have ever logged in.
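The calculation for this benchmark can be reproduced with a few lines of Python. Treat it as a rough sketch: the figures are from the adoption table, the helper name `percent_of_logged_in` and the `ACTIVE_TARGET` constant are hypothetical, and the whole-number rounding is my own choice for display.

```python
# (active, participating, contributing, logged_in) from the adoption table
FIGURES = {
    "AG":  (25000, 12000, 6000, 70000),
    "RBI": (12000, 9000, 7000, 20000),
    "SVC": (18000, 12000, 7000, 50000),
    "MM":  (7500, 5000, 4000, 9200),
    "UoC": (9000, 8000, 1000, 20000),
}

ACTIVE_TARGET = 50  # Benchmark 3: percent of ever-logged-in users who are active


def percent_of_logged_in(active, participating, contributing, logged_in):
    """Active, participating and contributing users as rounded percentages
    of everyone who has ever logged in."""
    return tuple(round(100 * n / logged_in)
                 for n in (active, participating, contributing))


shares = {name: percent_of_logged_in(*row) for name, row in FIGURES.items()}

# Compare the unrounded ratio with the target so rounding cannot flip a result.
passes = [name for name, (active, _, _, logged_in) in FIGURES.items()
          if 100 * active / logged_in >= ACTIVE_TARGET]

for name, (active_pct, part_pct, contrib_pct) in shares.items():
    print(f"{name}: active {active_pct}%, participating {part_pct}%, "
          f"contributing {contrib_pct}%")
print("Meet the 50% target:", passes)
```

On these numbers the active share comes out at 36% for AG, 60% for RBI, 36% for SVC, 82% for MM and 45% for UoC.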

Benchmark 4: Is the site more than just somewhere to visit?

The final benchmark left me a bit incredulous at first, as I thought it unrepresentative of a lot of the communities I’d seen. It certainly seemed a stretch goal. What this benchmark provides is a way of seeing whether the site is being used by just a handful of people or by a lot of people as a potentially useful business tool.

To provide more depth to this benchmark it might well be necessary to flesh it out by looking at what people are doing and whether the site is simply a document store or is being used for effective collaboration. Nonetheless this is a useful measure: what we’re looking for here is how many of the users are actually creating new materials rather than just viewing or commenting on existing documents and discussions. In my opinion it’s a very good measure of the health of the community and of how embedded its use is in the day-to-day activities of a wide group of people. Certainly, if a community met this benchmark then it would be a strong sign of successful and wide usage.

What this benchmark looks at is the number of people creating new content, Jive’s Contributing Users, against the number of Active Users. For Jive this figure should be 75%. When we look at our communities this is how they fare:

As we can see here, none of the communities meets this mark. Royal Bank of Ireland does best, with 58% of its active users generating new content. At the University of Chiswick, however, only 11% of active users are creating new items.

Now it might be argued that a social intranet will follow the pattern of the consumer web with Altimeter’s 90:9:1 rule, showing a small proportion creating genuinely new content and the majority of users simply lurking. The difference here though is that we’re looking at a business tool. If the tool is successfully embedded into day-to-day business then why are the majority of users not using it to create new materials? Are they not making new stuff (unlikely), or are they making it and collaborating using e-mail? Either way, this is indicative of how people are using the site: is it a business tool or a lurker’s paradise?

Benchmark 4: the number of Users creating new content (Contributing Users) should be 75% or higher, against the number of Active Users.
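As a quick check on this ratio, here is a small Python sketch that divides Contributing Users by Active Users for each company. The figures are from the adoption table; `FIGURES`, `ratios` and `CONTRIBUTING_TARGET` are illustrative names of my own.

```python
# (contributing, active) for each company, copied from the adoption table
FIGURES = {
    "AG":  (6000, 25000),
    "RBI": (7000, 12000),
    "SVC": (7000, 18000),
    "MM":  (4000, 7500),
    "UoC": (1000, 9000),
}

CONTRIBUTING_TARGET = 75  # Benchmark 4: contributing users as % of active users

# Contributing users as a percentage of active users, unrounded
ratios = {name: 100 * contributing / active
          for name, (contributing, active) in FIGURES.items()}

# List the companies from the highest ratio to the lowest
for name, pct in sorted(ratios.items(), key=lambda item: item[1], reverse=True):
    status = "meets" if pct >= CONTRIBUTING_TARGET else "misses"
    print(f"{name}: {pct:.0f}% of active users contributing ({status} target)")
```

The ratios work out at roughly 58% for RBI, 53% for MM, 39% for SVC, 24% for AG and 11% for UoC, so nobody reaches 75%.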

Conclusion

There may well be mileage in producing target ratios against the 90% we expect to have logged in or other such measures. Whilst I’m tempted to start extrapolating out more figures from my fictional companies, especially around and usage, or even providing them with working strategies to address their different challenges, I shall stop here. Maybe for future posts.

These figures are of course somewhat heavy-handed (the term ‘Instrumental Reason’ comes back from my Critical Theory days), so please see Deb Lavoy’s excellent article in CMS Wire, “2013 Prediction: Social Business Tech will Stop Blaming Culture for Failure”, for a more nuanced look at the value social business can bring to the table rather than just the numbers.

Nonetheless I do think they provide a useful way to make sense of Jive’s Community Manager Reports and to measure the success of a social business platform against industry norms. In the future it might be possible to get anonymous feeds of the data from Jive’s CMR that could then feed back into CMR to show how a community was doing. In the meantime we have Excel and calculators (and please let me know if you spot any errors in my arithmetic!) to see, at least privately, how your real communities measure up against the ones here. And also, if you have your own benchmarks to share, please do let me know.
